How We Kept Up With Kylie Jenner

Working on Media Temple’s Amazon Web Services (AWS) Cloud Services team is packed with challenges and learning opportunities. One such opportunity dropped on our doorstep at the beginning of November 2015. Kylie Jenner, the youngest member of the Kardashian-Jenner family, was preparing to share a new line of lipstick with the world on Cyber Monday, and she needed our help.

The chosen technology platforms were WordPress and WooCommerce, and we learned that the site design and setup had already been completed. They just needed a place to host http://lipkitbykylie.com/. The problem was that, while we ♥ WordPress and it has plenty of capabilities when it comes to publishing content, it’s not the best framework for a flash-sale situation. We recommended switching to other, more scalable solutions, but a redesign would have been very risky in light of the on-sale date. So the problem became a question of whether and how we could scale an infrastructure up to handle the inevitable tidal wave of requests.

At the core of the scalability problem was the single database requirement. All transactions had to update and reconcile against a single database instance for consistency ‒ selling more stock than they had would have been a big problem! Initial testing showed that the site quickly overwhelmed the database when even a moderate amount of traffic was thrown at it. In order to survive the rush, we needed to strengthen the database and remove any unnecessary requests.
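To make the consistency requirement concrete, here is a minimal sketch of a stock reservation that cannot oversell. It is an illustration only, not how WooCommerce manages inventory: it uses an in-memory SQLite database in place of the production database, and the table name `stock`, the column `on_hand`, and the SKU `lip-kit-demo` are all hypothetical.

```python
import sqlite3


def reserve_stock(conn: sqlite3.Connection, sku: str, quantity: int) -> bool:
    """Reserve `quantity` units of `sku`; return False if stock is too low."""
    # The stock check lives in the WHERE clause, so checking and decrementing
    # happen in one statement and two buyers cannot both take the last unit.
    cursor = conn.execute(
        "UPDATE stock SET on_hand = on_hand - ? WHERE sku = ? AND on_hand >= ?",
        (quantity, sku, quantity),
    )
    conn.commit()
    # rowcount is 1 when the row was updated, 0 when the guard rejected it.
    return cursor.rowcount == 1


def make_demo_db() -> sqlite3.Connection:
    """Build an in-memory table holding one hypothetical SKU with 2 units."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE stock (sku TEXT PRIMARY KEY, on_hand INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO stock VALUES ('lip-kit-demo', 2)")
    conn.commit()
    return conn
```

Because every order has to pass through a guard like this against one authoritative record, the database is the natural choke point: reads can be spread out or cached, but the final decrement cannot.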

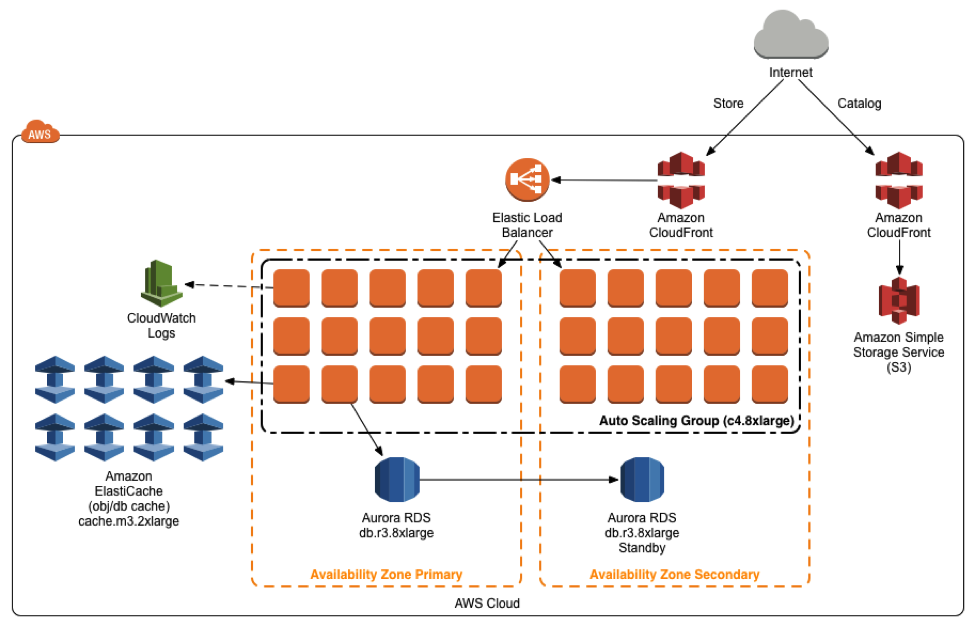

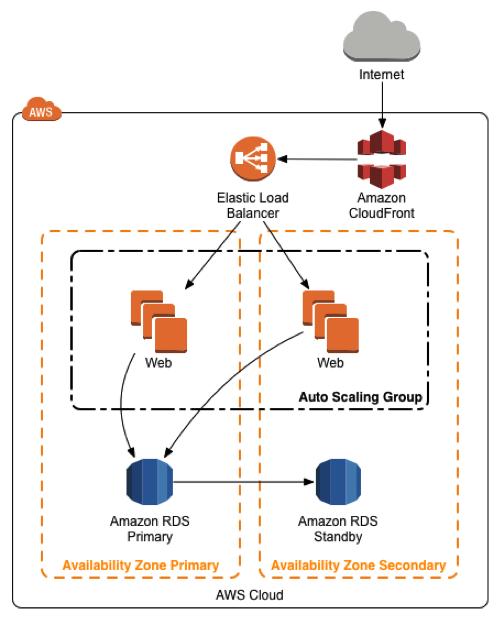

We deployed their codebase into a highly available auto-scaling architecture, with web instances running the site code in front of an RDS database pair.

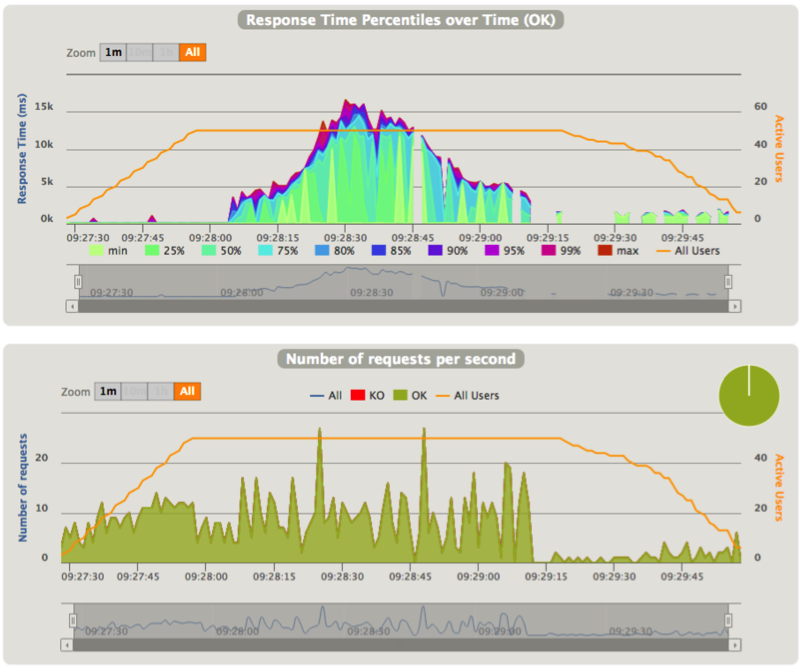

Then, we started load testing the infrastructure. We chose Gatling for its ability to model complex, multi-page interactions and for the rich reports it produces.

We started by capturing a user flow with the Gatling recorder and ran it against the infrastructure. If the infrastructure withstood the onslaught, we would increase the number of sessions. If not, we would optimize the infrastructure until it held up. By iterating between these tasks, we were quickly able to learn where bottlenecks were and massively scale up the capability of the infrastructure.
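The test-and-optimize loop described above can be sketched roughly as follows. This is not Gatling code: `run_test` is a hypothetical callback standing in for one full load-test run, and `fake_infrastructure`, the doubling factor, and the session ceiling are assumptions made for the example.

```python
from typing import Callable, List, Tuple


def find_breaking_point(
    run_test: Callable[[int], bool],
    start_sessions: int,
    growth_factor: float = 2.0,
    max_sessions: int = 100_000,
) -> Tuple[int, List[int]]:
    """Raise the load until a run fails or max_sessions passes.

    Returns (largest passing session count, every level attempted).
    """
    if start_sessions < 1 or growth_factor <= 1:
        raise ValueError("start_sessions must be >= 1 and growth_factor > 1")
    attempted: List[int] = []
    last_passing = 0
    sessions = start_sessions
    while True:
        attempted.append(sessions)
        if not run_test(sessions):
            break  # Bottleneck found: optimize, then rerun from this level.
        last_passing = sessions
        if sessions >= max_sessions:
            break  # The target load held up.
        sessions = min(int(sessions * growth_factor), max_sessions)
    return last_passing, attempted


def fake_infrastructure(capacity: int) -> Callable[[int], bool]:
    """Stand-in for a real run; it passes up to `capacity` sessions."""
    return lambda sessions: sessions <= capacity
```

For example, `find_breaking_point(fake_infrastructure(5000), 1000)` tries 1,000, 2,000, 4,000, and 8,000 sessions and reports 4,000 as the last level that held.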

Separation of Concerns

To start, we instructed the customer to clone the existing site. The original site retained the WooCommerce plugin and functionality, and became just a store with cart and checkout. The site clone was stripped of those plugins and reworked to become just a catalog, with each product linking directly to the equivalent “add to cart” functionality on the store site. This made the catalog completely static and easy to scale. We used HTTrack to scrape the website into an S3 bucket, which was then distributed via CloudFront.
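As an illustration of how a static catalog page can hand a shopper off to the store, the sketch below builds an add-to-cart link using WooCommerce's `add-to-cart` query parameter. How the links were actually built for this site isn't described here, so treat the hostname `store.example.com`, the `/cart/` path, and the product ID as hypothetical.

```python
from urllib.parse import urlencode, urlunsplit

# Hypothetical hostname for the store site that kept WooCommerce.
STORE_HOST = "store.example.com"


def add_to_cart_url(product_id: int, quantity: int = 1) -> str:
    """Return a store link that adds the product to the shopper's cart."""
    query = urlencode({"add-to-cart": product_id, "quantity": quantity})
    # Assumes the store uses the default /cart/ page.
    return urlunsplit(("https", STORE_HOST, "/cart/", query, ""))
```

With links like these baked into the scraped HTML, the catalog never needs to run PHP or touch the database; only the click-through reaches the store.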

With this change, a significant portion of the database requests was eliminated. The catalog, which is the first site customers would hit, could now scale.

Nginx Tuning

During initial load testing we quickly found that WordPress also requires a decent amount of processing power. An increase in the size and number of web instances was necessary. We also switched from Apache to Nginx, and raised the number of PHP workers to handle more requests per instance.
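A common rule of thumb for sizing PHP workers is to divide the memory left over after the operating system and web server by the average memory of one worker. The sketch below shows that arithmetic; the actual instance sizes and worker counts aren't given here, so every number in the example is hypothetical.

```python
def max_php_workers(total_ram_mb: int, reserved_mb: int, avg_worker_mb: int) -> int:
    """Estimate how many PHP workers fit in memory on one web instance.

    total_ram_mb:  memory on the instance
    reserved_mb:   memory kept back for the OS, Nginx, and other processes
    avg_worker_mb: observed average memory of a single PHP worker
    """
    if avg_worker_mb <= 0:
        raise ValueError("avg_worker_mb must be positive")
    usable_mb = total_ram_mb - reserved_mb
    # Round down: one worker too many risks swapping under load.
    return max(usable_mb // avg_worker_mb, 0)
```

For instance, a hypothetical 15,360 MB instance with 2,048 MB reserved and 64 MB per worker gives `max_php_workers(15360, 2048, 64)`, or 208 workers.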

With this change, the web instances were able to keep up with the higher number of requests that the larger databases could handle after our previous optimizations.

Caching

Next up, we turned our attention to internal WordPress caching to alleviate database traffic. We created an ElastiCache cluster and configured W3 Total Cache to cache database queries and objects. This resulted in only a marginal improvement, because most of the WooCommerce pages explicitly disable caching for consistency.
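A small sketch shows why a query cache helps so little when pages opt out of it. This is not W3 Total Cache's implementation: a plain dictionary stands in for the cache cluster, and `QueryCache`, `fetch`, and the `cacheable` flag are hypothetical names.

```python
from typing import Callable, Dict


class QueryCache:
    """Cache-aside wrapper that counts how often the database is hit."""

    def __init__(self, run_query: Callable[[str], str]) -> None:
        self._run_query = run_query
        self._store: Dict[str, str] = {}  # stand-in for the cache cluster
        self.db_hits = 0

    def fetch(self, sql: str, cacheable: bool = True) -> str:
        # Serve from cache only when the page allows it.
        if cacheable and sql in self._store:
            return self._store[sql]
        self.db_hits += 1
        result = self._run_query(sql)
        if cacheable:
            self._store[sql] = result
        return result
```

Repeating a cacheable query costs one database hit in total, while every non-cacheable query, such as one issued from a cart or checkout page, reaches the database each time.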

Amazon Aurora

Since we didn’t get much out of caching, we ended up provisioning the largest MySQL database available. When that wasn’t up to the task, we switched over to Aurora, the new high-performance MySQL-compatible offering from AWS. We quickly moved to the largest instance and it performed very well. We saw it hit 35K selects per second during peak load on Cyber Monday.

Final Production Infrastructure

In the end, we were able to improve performance by more than 160x and hit our testing goal of 100K user sessions (each with multiple requests) in 10 minutes. Unfortunately, though we tried again and again, it was difficult to push past that level, given the software technology platform (WordPress and WooCommerce).
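To put the testing goal in per-second terms, the arithmetic can be sketched as below. The session count and time window come from the figures above; the number of requests per session isn't stated, so `requests_per_session` is a hypothetical parameter.

```python
def sessions_per_second(total_sessions: int, window_minutes: float) -> float:
    """Average rate of new sessions over the test window."""
    return total_sessions / (window_minutes * 60)


def requests_per_second(
    total_sessions: int, window_minutes: float, requests_per_session: int
) -> float:
    """Average request rate, assuming every session makes the same requests."""
    return sessions_per_second(total_sessions, window_minutes) * requests_per_session
```

`sessions_per_second(100_000, 10)` works out to roughly 167 new sessions every second, before multiplying by however many requests each session makes.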

After more than a month of preparation, we opened the floodgates at 9:00 AM on Cyber Monday. As orders quickly flowed into the system and stock flew off the virtual shelves, the infrastructure performed quite well. However, traffic rapidly grew into a tidal wave beyond what even our 160x improvement could hold, and requests took longer and longer to complete. Although some users were unable to access the checkout cart, the stock sold out so quickly that we were able to switch traffic from the web instances to a static ‘out of stock’ site within minutes, which resolved the long request times. The event was complete.

It was a great learning experience, and Kylie Jenner and her team were thrilled with the outcomes. Through the entire process, including long hours on Thanksgiving weekend, AWS support was fantastic, working with us every step of the way. The load testing and infrastructure adjustments wouldn’t have been possible without the capacity, agility, and performance that AWS provided.